Ethical AI in Mental Health Initiatives: Ensuring Equitable Access

The Potential of AI in Mental Healthcare

AI-Powered Mental Health Assessments

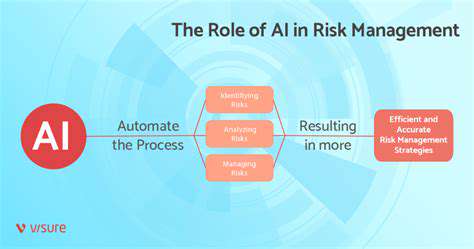

Artificial intelligence (AI) is increasingly used across healthcare, and mental health is no exception. AI-powered tools can offer quick, accessible mental health assessments that may identify individuals at risk for various conditions. These assessments provide preliminary data that can inform clinicians and support faster, more targeted intervention, especially in areas with limited mental health resources.

The speed and scale at which AI can process data make it possible to surface patterns and risks that human clinicians may miss. Analyzing large datasets of patient information, including text, images, and physiological data, could improve diagnostic accuracy and efficiency.

Personalized Treatment Plans

AI algorithms can analyze a patient's unique characteristics, including their medical history, lifestyle, and emotional responses, and use them to support tailored treatment plans. These plans can be adjusted over time as the patient's needs and responses evolve, providing a more dynamic therapeutic experience. By continuously monitoring and adjusting the treatment strategy, AI can help clinicians keep treatment aligned with the patient's progress toward recovery.

Enhanced Accessibility and Affordability

One of the significant benefits of AI in mental health is the potential to increase accessibility and affordability of care. AI-powered chatbots and virtual assistants can provide initial support and guidance, acting as a first point of contact for individuals seeking help. This can significantly reduce the burden on traditional mental health services and provide support to those who may have difficulty accessing in-person therapy. Furthermore, AI-driven tools can make mental health services more affordable by reducing the need for extensive and expensive human intervention.

Improving Mental Health Monitoring

AI can play a crucial role in monitoring the mental well-being of individuals over time. By analyzing various data points, such as patterns in mood, sleep, and activity, AI systems can detect subtle changes that might indicate a worsening condition. Early detection of these changes can allow for timely intervention and prevent a decline in mental health. These tools can help individuals and clinicians track progress and adjust treatment strategies as needed.
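As a minimal sketch of the kind of change detection described above, the following compares each new reading against a rolling baseline of the preceding days and flags large deviations. The function name `flag_deviations`, the 7-day window, the threshold of 2 standard deviations, and the sample sleep data are all assumptions made for illustration, not values taken from any clinical tool.

```python
from statistics import mean, stdev

def flag_deviations(values, window=7, threshold=2.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` values by more than `threshold` sample standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # flat baseline: a z-score is not meaningful
        if abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Hypothetical nightly sleep durations in hours (illustrative data only).
sleep_hours = [7.5, 7.0, 7.2, 7.4, 6.9, 7.1, 7.3, 7.2, 4.0, 7.0]
print(flag_deviations(sleep_hours))  # [8]
```

A flag like this is only a prompt for a person or clinician to look more closely. A single short night is not evidence of a worsening condition, and a real system would need clinically validated signals and thresholds.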

Ethical Considerations and Future Directions

While the potential of AI in mental health is immense, ethical considerations are paramount. Ensuring the privacy and security of sensitive patient data is critical. Careful development and rigorous testing of AI algorithms are essential to prevent bias and ensure fairness in their application. The future of AI in mental health will involve ongoing research and development, focusing on refining the technology and expanding its utility. This will include careful consideration of the ethical implications and the development of robust regulatory frameworks to ensure responsible use.

Bias Detection and Mitigation in AI Algorithms

Identifying Biases in Algorithmic Systems

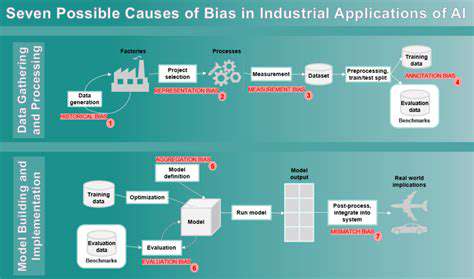

Bias in algorithmic systems is a significant concern in areas like hiring, loan applications, and criminal justice, and it applies equally to mental health tools. These systems, often trained on vast datasets, can inadvertently reflect and amplify existing societal biases, leading to unfair or discriminatory outcomes. Understanding the potential sources of bias is crucial to developing effective mitigation strategies.

Bias can manifest in various forms, including data bias, algorithm bias, and evaluation bias. Data bias arises when the training data itself reflects societal prejudices, leading to skewed results. Algorithm bias stems from the design and implementation of the algorithms themselves, potentially amplifying existing inequalities. Finally, evaluation bias can occur when the metrics used to assess the system's performance fail to account for the potential biases that might be present.

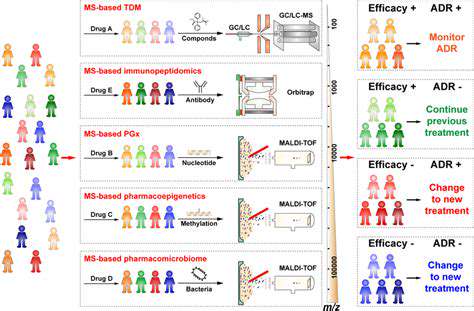

Techniques for Bias Detection

Several techniques can be employed to detect and analyze bias in algorithmic systems. These range from statistical methods to qualitative assessments, allowing for a multi-faceted approach to identifying potential issues. One common approach involves comparing the performance of the system across different demographic groups. This comparison can highlight disproportionate outcomes and reveal potential biases in the system's decision-making processes.
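The group comparison described above can be sketched as follows, assuming a binary screening model and records of the form `(group, y_true, y_pred)`. The function name `recall_by_group`, the group labels, and the sample records are hypothetical. Recall (the share of truly at-risk individuals the model flags) is used here as one example metric, and other metrics can be compared the same way.

```python
from collections import defaultdict

def recall_by_group(records):
    """Compute recall (true positive rate) per demographic group.

    records: iterable of (group, y_true, y_pred) with labels in {0, 1}.
    Groups with no positive cases are omitted from the result.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}

# Illustrative records only: (group, actually at risk, flagged by model).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
print(recall_by_group(records))  # {'A': 0.75, 'B': 0.5}
```

In this made-up sample the model misses half of the at-risk individuals in group B but only a quarter in group A, which is the kind of disproportionate outcome the comparison is meant to expose.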

Another valuable technique is the use of adversarial or counterfactual examples. These are carefully crafted inputs, such as two otherwise identical records that differ only in a demographic attribute, designed to test whether the system's output changes when it should not. By analyzing the system's responses to these examples, we can identify potential vulnerabilities and areas where bias might be present. Analyzing the distribution of data points within the training set can also reveal significant disparities. For instance, an imbalance in the representation of certain demographic groups within the data might indicate a potential bias that needs to be addressed.
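A minimal sketch of the representation check mentioned above, assuming each training example carries a group label and that reference population shares are available. The function name `representation_gap`, the group labels, and the 50/50 reference shares are assumptions chosen for illustration.

```python
from collections import Counter

def representation_gap(groups, reference_shares):
    """Return, per group, the share in the dataset minus the reference share.

    Positive values mean the group is over-represented in the data,
    negative values mean it is under-represented.
    """
    total = len(groups)
    if total == 0:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    return {
        g: round(counts.get(g, 0) / total - share, 3)
        for g, share in reference_shares.items()
    }

# Hypothetical training-set labels and reference population shares.
training_groups = ["A"] * 8 + ["B"] * 2
gaps = representation_gap(training_groups, {"A": 0.5, "B": 0.5})
print(gaps)
```

Here group B makes up 20% of the data against an assumed 50% of the population, a gap that would warrant a closer look before training.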

Finally, human review and expert analysis can play a crucial role in identifying subtle biases that might be missed by purely automated methods. Expert opinions can provide valuable context and insight into the potential impact of the system on different groups.

Mitigation Strategies and Best Practices

Addressing bias in algorithmic systems requires a multifaceted approach encompassing data preprocessing, algorithm design, and evaluation methodologies. Techniques like data augmentation, re-weighting, and data cleaning can help mitigate the effects of biased data. These methods aim to reduce the impact of skewed representations and create a more balanced dataset for training.
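Re-weighting, one of the techniques named above, can be sketched with a simple inverse-frequency heuristic: each example gets a weight so that every group contributes the same total weight during training. The function name `balanced_weights` and the sample labels are hypothetical, and this is one common weighting scheme among several, not a prescribed method.

```python
from collections import Counter

def balanced_weights(groups):
    """Return one weight per example so each group has equal total weight.

    weight = n_examples / (n_groups * group_count), so the weights
    sum to n_examples overall.
    """
    counts = Counter(groups)
    n_examples = len(groups)
    n_groups = len(counts)
    return [n_examples / (n_groups * counts[g]) for g in groups]

# Illustrative labels: group B is under-represented three to one.
groups = ["A", "A", "A", "B"]
weights = balanced_weights(groups)
print(weights)
```

Many training libraries accept per-example weights like these. Whether re-weighting actually improves fairness for a given model still has to be checked with group-level evaluation afterwards.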

Furthermore, the design of algorithms themselves can be modified to actively counteract bias. Techniques such as fairness-aware learning algorithms and robust optimization methods are being developed to create systems that are less susceptible to discriminatory outcomes. Developing appropriate evaluation metrics that consider fairness and equity is critical. These metrics must be carefully designed to assess the impact of the system on different groups, ensuring that fairness is consistently considered.
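One example of a fairness-oriented evaluation metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below assumes binary predictions. The function name and sample data are hypothetical, and demographic parity is only one of several fairness definitions, which can conflict with one another.

```python
from collections import defaultdict

def demographic_parity_difference(groups, y_pred):
    """Return (difference, rates), where rates maps each group to its share
    of positive predictions and difference is max(rates) - min(rates)."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for group, pred in zip(groups, y_pred):
        totals[group] += 1
        positives[group] += pred
    if not totals:
        raise ValueError("no predictions supplied")
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates

# Illustrative predictions: 1 means the model recommends follow-up care.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
difference, rates = demographic_parity_difference(groups, y_pred)
print(difference, rates)  # 0.5 {'A': 0.75, 'B': 0.25}
```

A difference of 0 means all groups receive positive predictions at the same rate. What counts as an acceptable gap depends on the application and on whether base rates genuinely differ between groups.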

Transparency and accountability are essential components of any successful bias mitigation strategy. Clearly documenting the data sources, algorithms, and evaluation metrics used is crucial for ensuring that the system's decisions are understandable and justifiable.
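The documentation described above can be kept as a small structured record stored alongside the model. The sketch below is an assumption about what such a record might contain. The class name `ModelRecord`, its fields, and every value in the example are hypothetical placeholders, not real results.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelRecord:
    """Hypothetical documentation record for a deployed model."""
    name: str
    data_sources: list
    algorithm: str
    evaluation_metrics: dict
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        # Serialize with sorted keys so successive versions diff cleanly.
        return json.dumps(asdict(self), indent=2, sort_keys=True)

# Placeholder values for illustration only.
record = ModelRecord(
    name="example-screening-model",
    data_sources=["hypothetical clinic intake survey"],
    algorithm="logistic regression",
    evaluation_metrics={"recall_group_A": 0.75, "recall_group_B": 0.5},
    known_limitations=["group B is under-represented in the training data"],
)
print(record.to_json())
```

Keeping the record in version control next to the model makes it easier to review how data sources and fairness metrics change between releases.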

Promoting Collaboration and Fostering Trust

Building Trust Through Transparency

Transparency is paramount in fostering trust, especially in a sensitive domain like mental health. Open communication about the AI's limitations, decision-making processes, and data usage is crucial. Users need to understand how the AI system functions and how their data is being handled. This includes clear explanations of the AI's strengths and weaknesses, ensuring users are aware of potential biases and limitations. Detailed privacy policies, readily accessible and easily understood, are essential to build user confidence and demonstrate a commitment to ethical practices. This transparency extends to the data sources used to train the AI model, highlighting the diversity and representativeness of the data set. Such transparency builds trust and facilitates informed consent.

Moreover, actively soliciting feedback from users about their experiences with the AI system is vital. Channels for sharing concerns, suggestions, and experiences help identify areas for improvement. Regularly updating users on how their feedback has been addressed reinforces trust and shows that their input is heard and valued.

Collaborative Partnerships for Ethical Development

Developing ethical AI in mental health requires a multi-faceted approach, involving collaboration between various stakeholders. This includes partnerships with mental health professionals, researchers, patient advocacy groups, and policymakers. Collaboration supports a holistic understanding of the needs and challenges within the mental health sector and facilitates the development of AI systems that are beneficial and ethically sound. It also helps tailor AI systems to the specific needs of diverse populations, reducing the risk of bias and supporting equitable access to mental health resources.

Engaging with mental health professionals throughout the development process is crucial. Their expertise in understanding patient needs, identifying potential risks, and ensuring the AI system complements existing practices is essential. Incorporating their perspectives, feedback, and insights directly into the design and implementation stages fosters a system that aligns with best practices and promotes positive impacts on mental health care.

Fostering Interdisciplinary Collaboration and Research

The development of ethical AI in mental health necessitates interdisciplinary collaboration between computer scientists, psychologists, ethicists, and other relevant professionals. This multidisciplinary approach ensures a comprehensive understanding of the ethical, social, and technical implications of AI in mental health. It enables the development of AI tools that are not only technologically advanced but also align with ethical principles and best practices for mental health care.

Rigorous research is essential to evaluate the effectiveness and safety of AI tools in diverse populations. Conducting large-scale, well-designed studies, in collaboration with mental health professionals, will help determine the impact of these tools and identify any potential biases or adverse effects. The research should be transparent, reproducible, and rigorously evaluated by independent experts, ensuring that all findings are credible and reliable.

Further research should investigate the potential of AI to address specific mental health needs, including culturally sensitive interventions and support for marginalized communities. Collaboration with these communities is paramount for developing AI systems that cater to their unique needs and avoid perpetuating existing inequalities.

Evaluating the long-term impact of AI in mental health is crucial to keeping these systems ethically sound. Regular assessments and revisions will be necessary to keep up with advancements in mental health knowledge and to maintain the safety and efficacy of AI tools. This requires ongoing monitoring of each system's performance, continuous evaluation of its impact, and proactive adaptation to evolving ethical standards, in close collaboration with mental health professionals and policymakers so that the AI aligns with best practices and avoids unintended negative consequences.

Open discussions about the ethical implications of AI in mental health will be necessary to ensure the development of trustworthy and responsible systems.
