The Human Touch: How AI Enhances, Not Replaces, Mental Health Support

Ethical Considerations and the Future of AI in Mental Health

Bias in AI Algorithms

A significant ethical concern surrounding AI in mental health is the potential for bias in algorithms. These algorithms are trained on data, and if that data reflects existing societal biases, the AI may perpetuate and even amplify them. For example, if a dataset predominantly features patients from a specific socioeconomic background or with certain diagnoses, the AI may be less accurate for, or even discriminate against, individuals from other backgrounds. This could lead to misdiagnosis, inappropriate treatment recommendations, or unequal access to care, which is why diverse and representative training datasets are essential.

Furthermore, the lack of transparency in some AI algorithms makes it difficult to identify and address potential biases. Understanding *how* an algorithm arrives at a particular conclusion is essential for ensuring fairness and accountability. Without this understanding, it is hard to determine whether an outcome rests on sound clinical reasoning or is influenced by biases embedded in the data or the algorithm itself.
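One way to make the subgroup concern in this section measurable is to compare a model's accuracy across patient groups. The following is a minimal sketch, not a clinical validation method: the function names (`accuracy_by_group`, `largest_accuracy_gap`), the group labels, and the toy records are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def largest_accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: (group label, model prediction, true label).
records = [
    ("group_a", 1, 1),
    ("group_a", 0, 0),
    ("group_a", 1, 1),
    ("group_a", 0, 1),
    ("group_b", 1, 0),
    ("group_b", 0, 1),
    ("group_b", 1, 1),
    ("group_b", 0, 0),
]

per_group = accuracy_by_group(records)
gap = largest_accuracy_gap(per_group)
print(per_group, gap)
```

A large gap between groups does not by itself prove discrimination, but it is a signal that the training data or the model deserves a closer look before the tool is used in care decisions.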

Data Privacy and Security

The use of AI in mental health necessitates careful consideration of patient data privacy and security. AI systems often require access to sensitive personal information, including medical records, communication logs, and potentially even social media data. Robust security measures are paramount to prevent unauthorized access, breaches, and misuse of this information. This includes implementing strong encryption protocols, access controls, and regular security audits to safeguard patient confidentiality and maintain trust in these systems.

In addition, clear and transparent data policies must be established, outlining how patient data will be collected, stored, used, and protected. These policies need to be readily accessible and understandable to patients, so that they retain control over their information and understand the implications of its use.
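As one small illustration of limiting exposure of sensitive identifiers, the sketch below replaces a patient identifier with a keyed hash before the record reaches an analysis pipeline. This is pseudonymization, a complementary measure rather than the encryption, access controls, or audits mentioned above, and it is not sufficient on its own. The `pseudonymize` function and the identifier format are assumptions made for the example; it uses only Python's standard `hmac`, `hashlib`, and `secrets` modules.

```python
import hashlib
import hmac
import secrets


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Map an identifier to a stable token that cannot be recomputed without the key."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption: in practice the key would live in a secrets manager,
# stored separately from the data it protects.
key = secrets.token_bytes(32)

token_1 = pseudonymize("patient-0001", key)  # hypothetical identifier
token_2 = pseudonymize("patient-0001", key)
token_3 = pseudonymize("patient-0002", key)

print(token_1 == token_2)  # same patient, same token
print(token_1 == token_3)  # different patients, different tokens
```

Because the same identifier always maps to the same token, records can still be linked for analysis, while anyone without the key cannot recompute which token belongs to which patient.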

Transparency and Explainability

For AI systems to be ethically sound in mental health applications, their decision-making processes must be transparent and explainable. Patients and clinicians need to understand *why* an AI system reaches a particular conclusion or recommendation. This transparency fosters trust and allows for human oversight and intervention when necessary. Without explainability, it is difficult to assess the validity of AI-driven diagnoses or treatment plans, and their output is more likely to be misinterpreted or misused.
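To give a sense of what an explainable output can look like, the sketch below uses a deliberately simple linear score and reports how much each input contributed to it. This is an illustrative assumption, not a description of how any particular mental health tool works: the function `explain_linear_score`, the feature names, and the weights are all hypothetical, and more complex models need dedicated explanation techniques.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and per-feature contributions, largest magnitude first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical weights and inputs with no clinical meaning.
weights = {"feature_a": 0.5, "feature_b": -0.25, "feature_c": 0.125}
features = {"feature_a": 4.0, "feature_b": 4.0, "feature_c": 4.0}

score, ranked = explain_linear_score(weights, features, bias=1.0)
print(score)
for name, contribution in ranked:
    print(name, contribution)
```

Presenting the ranked contributions alongside the score lets a clinician see which inputs drove a recommendation and challenge it when those inputs look wrong or irrelevant.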

Accountability and Responsibility

Determining accountability when AI systems make errors or misjudgments in mental health contexts is a crucial ethical challenge. Who is responsible: the developers of the AI, the clinicians using the system, or the patients themselves? Establishing clear lines of responsibility and mechanisms for addressing errors will be essential to maintaining public trust and ensuring the safe and effective integration of AI into mental health care. This includes developing procedures for handling complaints and ensuring appropriate remedies for harm caused by AI-related errors.

The Role of Human Clinicians

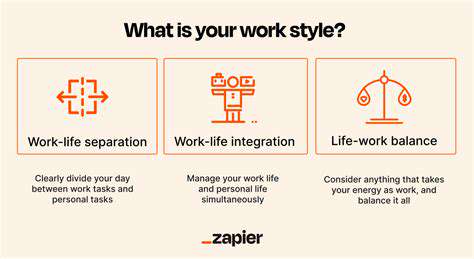

AI tools should not replace human clinicians but rather augment their capabilities. The future of AI in mental health lies in its collaboration with human expertise, not its complete substitution. Clinicians need to develop expertise in interpreting AI output, understanding its limitations, and using AI insights to enhance their own clinical judgment. A crucial part of this is training and education for clinicians on how to integrate AI tools into their practice effectively while maintaining the essential human touch in patient care. This helps preserve the unique qualities of human empathy, intuition, and cultural understanding.
